Using GenAI to Code? Not So Fast

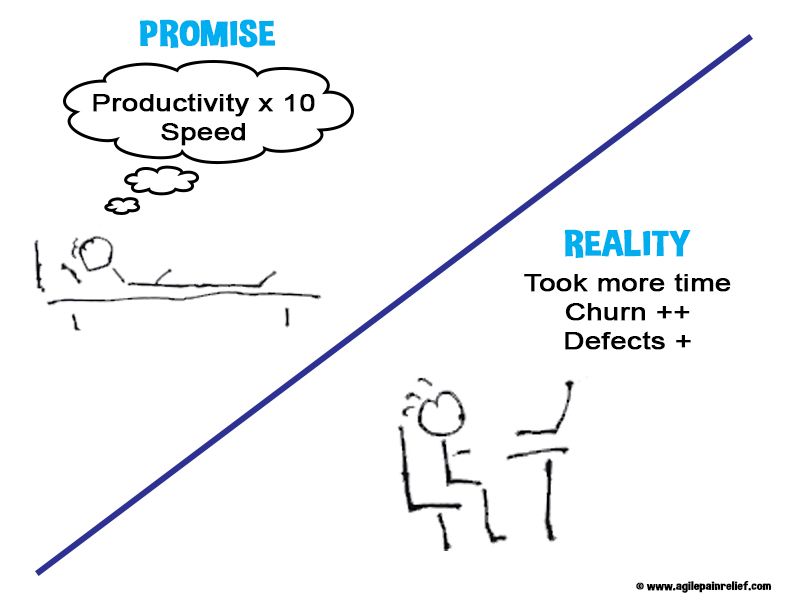

AI coding agents have been sold to us with big promises about how much faster we will work when using them. In our “The Real Cost of AI-Generated Code: It’s Not All It’s Cracked Up To Be” article, we showed that this speed comes at a cost: increased Technical Debt and decreased maintainability. The Agile community has witnessed this pattern many times over the years.

Aside from sharpening our skills, most things that promise to help us go faster come at a cost. Now there is new research that calls into question even the speed-up of writing code with GenAI.

Read for Yourself and Decide

Summary and the full paper: Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity.

Caveats: this is a single research paper which studied senior developers who contributed to open source projects. The codebases for the projects were large (over 1,000,000 lines of code), and the developers were familiar with their projects. Some effort was made to ensure that the projects had good-quality code in the first place. So the paper is studying a narrow slice of work, and the results might not generalize.

The researchers paid the participants to address outstanding issues in their projects. Each issue was randomly assigned to one of two conditions: AI allowed or no AI. The developers were also asked to forecast how long each issue would take to fix. When allowed to use AI, they predicted they would finish 24% faster than without it. That’s not what happened. With AI, the developers took, on average, 19% longer to complete a task. Interestingly, even though they were notably slower than when working unaided, they perceived that they had worked 20% faster.
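To make the gap between perception and measurement concrete, here is a small worked example using the 19% and 20% figures above. It is only a sketch: the one-hour baseline is a hypothetical task, and it assumes "20% faster" means 20% less completion time.

```python
def with_ai_minutes(baseline_minutes: float, change_pct: float) -> float:
    """Completion time after a percentage change; positive means slower."""
    return baseline_minutes * (1 + change_pct / 100)


baseline = 60.0  # hypothetical task that takes one hour without AI

actual = with_ai_minutes(baseline, 19)      # measured: 19% longer
perceived = with_ai_minutes(baseline, -20)  # felt: 20% less time (assumed reading)
gap = actual - perceived                    # how far perception is from reality

print(f"actual {actual:.1f} min, perceived {perceived:.1f} min, gap {gap:.1f} min")
```

On a one-hour task, the developer would feel they finished in about 48 minutes while the clock says about 71, a gap of more than 23 minutes.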

These results are contrary to what we see reported elsewhere, but those other reports have weaknesses of their own:

- Some research has been done using either simple tasks like implementing a basic HTTP server (“The Impact of AI on Developer Productivity: Evidence from GitHub Copilot”) or synthetic tasks (“Significant Productivity Gains through Programming with Large Language Models”), neither of which necessarily generalizes to real-world tasks.

- Other studies report on the number of lines of code generated, ugh (“Sea Change in Software Development: Economic and Productivity Analysis of the AI-Powered Developer Lifecycle”), commits, or pull requests (“The Effects of Generative AI on High-Skilled Work: Evidence from Three Field Experiments with Software Developers”). Of course, none of these measures is correlated with high-quality code that meets the business needs.

- SWE-bench and other benchmarks often make it seem like AI tools are good at solving a wide range of problems. However, these benchmarks have flaws. The only metric that matters to them is: Did the code pass the tests? But the projects used for the benchmarks often have incomplete test cases, so the generated code might fix the original issue but introduce a new bug that no test catches. Worse, the code isn’t examined to see if it is readable or fits the established patterns of the codebase.

- Much of what we’re seeing to support the value of GenAI tools is self-reported data. As the above research study illustrates, self-reported data doesn’t necessarily correspond to reality! As an example, I perceived my last bike ride was 20% faster than the previous one, but the data sadly shows it was merely average.

- DORA report (State of DevOps) - The DORA 2024 report missed the boat by measuring developers’ perceptions about AI. It found that they feel more productive and believe they have reduced complexity. But, as seen above, self-reported data isn’t the most reliable when it comes to performance.

More caveats on the research paper:

- There is a risk that the paper’s methodology missed something important about real work (although this paper already has a better methodology than previous papers).

- Many of the developers hadn’t used Cursor before. Maybe with more practice they would have been faster.

- The study used senior developers. Maybe junior developers would gain more benefit from AI assistance. But that leaves the question of how junior developers grow and become senior. Will they spend enough time reviewing the generated code to understand it and verify that it’s a good fit?

- This is a single paper. Maybe a larger sample size or a different mix of people would have produced a different result.

What this paper does is give me pause in accepting the statements that claim AI is magically improving developer productivity. For a concrete example of how AI coding tools struggle with real tasks, see the results of our AI Code Generation and the Tennis Kata experiment.

What would I like to see? I would like papers to look at additional questions not covered in this study:

- Was the code change a sensible size? Generally, we don’t want bug fixes to be large changes. Large bug fixes introduce new risks.

- Did the AI-generated code fit the idioms used in the project?

- Was the AI-generated code readable?

- Did the AI avoid adding any new dependencies?
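Two of these questions, change size and new dependencies, lend themselves to a rough automated check. The sketch below is only an illustration: the diff, the dependency names, and the `MAX_BUGFIX_LINES` threshold are all hypothetical, and readability and idiom still need a human reviewer.

```python
def changed_line_count(unified_diff: str) -> int:
    """Count added and removed lines in a unified diff, skipping file headers.

    Rough heuristic: a changed line whose content itself begins with
    "--" or "++" would be mistaken for a header and skipped.
    """
    count = 0
    for line in unified_diff.splitlines():
        if line.startswith(("+++", "---")):
            continue  # file header lines, not content changes
        if line.startswith(("+", "-")):
            count += 1
    return count


def new_dependencies(before: set[str], after: set[str]) -> set[str]:
    """Dependencies present after the change but not before it."""
    return after - before


# Hypothetical bug-fix diff and dependency lists, for illustration only.
diff_text = """\
--- a/score.py
+++ b/score.py
@@ -10,3 +10,3 @@
 def score(points):
-    return NAMES[points + 1]
+    return NAMES[points]
"""
MAX_BUGFIX_LINES = 50  # assumed threshold; choose one that suits the project

size = changed_line_count(diff_text)
added = new_dependencies({"requests"}, {"requests", "leftpad"})

print(f"changed lines: {size} (limit {MAX_BUGFIX_LINES})")
print(f"new dependencies: {sorted(added)}")
```

A check like this only flags a change for closer review; it says nothing about whether the fix is correct.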

It is early days in our use of GenAI for software development. The models themselves will likely improve (for example, in their context windows), and tool vendors will find ways to give the LLM more information about the codebase. I also know of some developers who are using a BDD style of development coupled with code generation, which might well pay off.

I hope we all learn to be more skeptical of promises about productivity increases without first examining the methodology underneath. (I, for one, have written off the DORA Reports as unreliable.) In our Certified ScrumMaster workshops, we focus on what actually helps teams deliver value, not promises of faster output.

Mark Levison

Mark Levison has been helping Scrum teams and organizations with Agile, Scrum and Kanban style approaches since 2001. From Certified ScrumMaster training to custom Agile courses, he has helped well over 8,000 individuals, earning him respect and top-rated reviews as one of the pioneers within the industry, as well as a raft of certifications from the ScrumAlliance. Mark has been a speaker at various Agile conferences for more than 20 years, and is a published Scrum author with eBooks as well as articles on InfoQ.com, ScrumAlliance.org and AgileAlliance.org.