Stable Teams Really Do Matter

For years now Rally has been performing a large ongoing experiment on the Agile world. As a side effect of providing one of the better known tools they’ve managed to see a lot of data accumulate about what makes an effective Agile team. In a report called “The Impact of Agile Quantified” they’ve sanitized the data and then run some statistical analysis on it.

Here’s what they saw:

- Dedicating Team Members to one team doubles productivity, reduces variance throughput and leads to a small reduction in defects. They note that this is a fairly common practice among teams.

- Stable Teams – keeping a team intact for the long term resulted in 60% more productivity; teams were more predictable and responsive.

- Limiting Work In Progress – reduces defect rates; however, if your WIP limit is already low, reducing it further might affect productivity.

- Relative/Story Point Estimation[1] – They divide the world into teams that: A - Don’t estimate; B - Estimate Stories in hours; C - Estimate Stories in Points – tasks in hours, and D - teams that only Estimate using Story Points (i.e. teams that have dropped task hours). Their discovery – the teams (A) not using estimates had 2.5 times as many defects as the teams (C) using Story Points and Task hours. An additional surprise – teams (C) using Story Points and task hours had fewer defects than (D) teams only using Story Points. Some of the discoveries in this one could use further investigation.

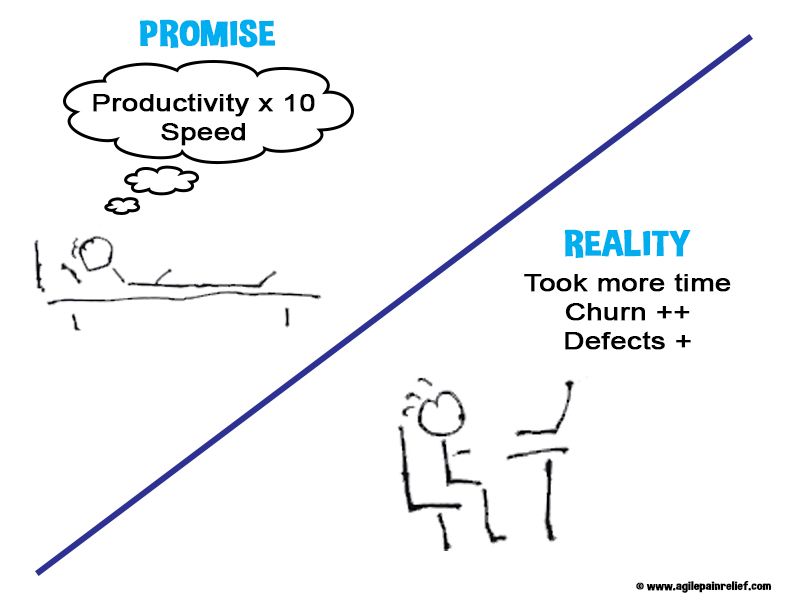

- Team Size – 7+/- 2 – the findings suggest that teams before the Agile norms are more productive, but have a higher defect rate and reduced predictability, whilst larger teams were more predictable. The authors note that the larger teams typically also used “Story Point Estimation for Stories and Hours for Tasks” – this might explain some of the productivity differences. The authors recommend sticking to the traditional recommendation of 5-9. Before switching all your teams to 3 people or less – which is tempting with the promise of more productivity – also consider the effect on the work if even one team member leaves. This is another datapoint that bears digging into.

I was surprised to find that stable teams are less frequent among the Rally customers than my own. Rally noticed that 1 in 4 people changed every 3 months. Experience at my regular clients suggests that it should be less than that. No matter what the frequency, we have to appreciate that every change is expensive; both in terms of the knowledge lost and the consequent slowdown while team members renegotiate their relationships. It’s hard to build the high performance teams that we all seek when we have frequently changing membership.

As with any set of measures, I think the value isn’t so much in the number as the signal regarding what to look at in our teams. In addition, I suspect some high performing teams will probably be doing things that don’t show up well in the larger dataset. For instance, I’ve seen many high performing teams with less WIP than the number of team members. Instead they swarm all work, etc.

The report from Rally is well worth reading, although it’s sixteen pages long. (You will have to give away your email address).

To my friends at Rally, there are many interesting questions to be asked here. If we look only at stable teams – what do we learn about team size? If we look only at mature teams (>1 yr old and stable) – do any of our discoveries around team size and estimation change? What about time to fix defects vs. productivity or quality? What about time to fix defects vs. team size? Story size vs. productivity vs. defects? Distributed teams’ productivity? What about the highest performing teams – what where they doing…? Have you considered releasing your dataset to the rest of the world so we can help you mine it? Two reasons: more eyes will spot more ideas and the Agile ideas have always been developed and evolved in an open fashion. Perhaps you could release with the rule that anything someone else discovers from it has to be shared openly.

Hat tip to Dave Nicolette who first pointed this paper out to me

[1]In the paper this is referred to “Full Scrum” – which is odd since Scrum doesn’t require Estimation at all.

Image attribution: Photodune.net

Mark Levison

Mark Levison has been helping Scrum teams and organizations with Agile, Scrum and Kanban style approaches since 2001. From certified scrum master training to custom Agile courses, he has helped well over 8,000 individuals, earning him respect and top rated reviews as one of the pioneers within the industry, as well as a raft of certifications from the ScrumAlliance. Mark has been a speaker at various Agile Conferences for more than 20 years, and is a published Scrum author with eBooks as well as articles on InfoQ.com, ScrumAlliance.org and AgileAlliance.org.