GenAI vs Human Intelligence - a Reality Check

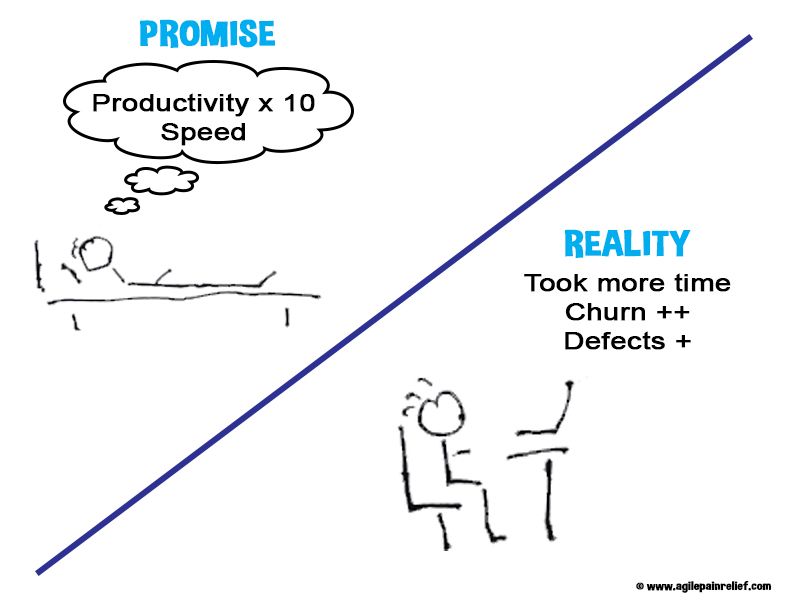

Generative AI is being pitched as a tool to replace software developers, human resources professionals, graphic designers, editors, radiologists, and many others. Leaders are mandating the use of AI without understanding what they’re asking for. The language used to describe GenAI includes words like thinking, reasoning, and learning, and we’ve been told many times that superintelligent AI is just around the corner. My late father used to say, “Marvin Minsky promised me AI would be coming in a generation.”[1] Minsky was trying to develop machine intelligence that could compete with human intelligence. I’m still waiting.

The hype and the language used to describe GenAI make it difficult to understand what it is good at and what it can’t do. Every Scrum team I encounter is doing creative work and solving real problems. In theory, large language model (LLM) tools can do much of our current work, but can they truly replace human creativity and thinking in the workplace?

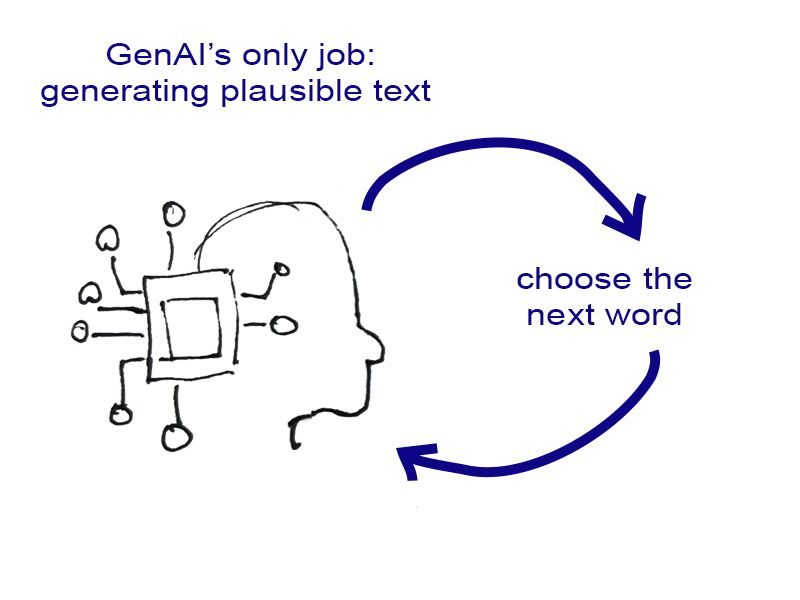

At its core, Generative AI takes the text you give it and rolls a giant set of dice to predict the next word in your sentence. It works fairly well because it has been trained on a huge volume of text: effectively the entire internet and most of the world’s books. So remember: there is no magic; these models are just prediction machines. Once we see GenAI as a tool for generating a plausible response to our prompt, the following limitations become easier to understand.
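To make the dice metaphor concrete, here is a toy sketch in Python. The words and probabilities are invented for illustration, and `roll_for_next_word` and `most_likely_word` are hypothetical helpers, not any vendor’s API. Real models work on tokens rather than whole words and compute a fresh distribution over many thousands of tokens at every step.

```python
import random

# Invented probabilities for the word that might follow "The cat sat on the".
# A real model computes a distribution like this at every step; these numbers
# are made up purely for illustration.
NEXT_WORD_PROBS = {
    "mat": 0.55,
    "sofa": 0.25,
    "roof": 0.15,
    "keyboard": 0.05,
}


def roll_for_next_word(probs: dict[str, float], rng: random.Random) -> str:
    """Roll the 'dice': pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = list(probs.values())
    return rng.choices(words, weights=weights, k=1)[0]


def most_likely_word(probs: dict[str, float]) -> str:
    """Always pick the single most probable word (no dice roll at all)."""
    return max(probs, key=probs.get)


rng = random.Random()  # unseeded, so the rolls can differ from run to run
print("Five dice rolls:", [roll_for_next_word(NEXT_WORD_PROBS, rng) for _ in range(5)])
print("Most likely word:", most_likely_word(NEXT_WORD_PROBS))
```

Run it a few times: the rolled words change from run to run, while `most_likely_word` always returns "mat". That variability is one reason the same prompt can produce different answers. Notice also that nothing in either function checks whether the chosen word is true; it is only likely.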

Fundamental Limitations of GenAI:

- Originality - Under the right circumstances, you can get many GenAI models to spit out chunks of existing creative works, including J.K. Rowling’s “Harry Potter and the Philosopher’s Stone”[2]. But that is just regurgitating human creativity, not being creative in its own right.

- Learning and Memory - Contrary to popular belief, the models don’t really learn from you. They can only draw on the data included in their training, plus whatever is in the current conversation. Each chat with a model is a fresh start. Within a chat, its memory (the “context window”) works like a rolling window: once the conversation fills it, the earliest part is dropped. (OpenAI and other vendors have worked around this by saving key details from past conversations and inserting them at the beginning of each new conversation.) Worse, even models with large context windows suffer from recency bias: they tend to give more weight to the most recent part of the conversation.

- Thinking, Reasoning and Bias - Many current GenAI models now have a reasoning mode, which makes them better at solving some problems. But it isn’t reasoning in the human sense; it’s pattern matching[3]. This works well when a problem is close to what the model was trained on; however, it can go off the rails when the problem is even slightly outside its training. Models can also amplify the biases found in their training data.

- Mistakes and Self-Explanation - If a child makes a mistake and we ask them how it happened, their explanation is based on the actual series of thoughts and events. If a model makes a mistake and we ask it to explain the error, the explanation is entirely made up. The model has no self-awareness; it doesn’t understand what a mistake is, let alone that it made one. When asked, it will come up with a plausible explanation, because its job is to generate plausible text in response to a prompt.[4] More importantly, the child will learn from the mistake and update their understanding of how the world works. The model can’t, because it doesn’t change: once its training is finished, it stops learning, so each conversation starts with the same knowledge as the last.

- Math - If we ask a child what 6 × 7 is, they will know the answer and understand how they got there. If we ask a model what 6 × 7 is, it will likely give the correct answer, but only because that fact appeared many times over in its training data. OpenAI and Anthropic have worked around this by giving their models access to a Python interpreter: the model turns a math problem into Python code and runs it. This works as long as the model recognizes that it is a math problem and translates it correctly into Python. (In OpenAI’s ChatGPT with GPT-5, the Python interpreter is hidden under the hood, so you can’t see what is going on.)
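The rolling-window memory described under Learning and Memory can be sketched in a few lines of Python. This is a simplified illustration, not how any particular vendor implements it: the function name `build_context` and the 12-word limit are assumptions for the example, and real context windows are measured in tokens and hold far more text.

```python
from collections import deque

# Hypothetical budget for illustration only. Real context windows are measured
# in tokens (not words) and hold far more text than this.
CONTEXT_LIMIT_WORDS = 12


def build_context(messages: list[str], limit: int = CONTEXT_LIMIT_WORDS) -> list[str]:
    """Return the most recent messages that fit within `limit` words.

    Walks backwards from the newest message; as soon as the next-oldest
    message would overflow the window, it and everything before it is dropped.
    """
    kept: deque[str] = deque()
    used = 0
    for message in reversed(messages):  # newest first
        size = len(message.split())
        if used + size > limit:
            break  # this message and all older ones fall out of the window
        kept.appendleft(message)  # keep the original oldest-to-newest order
        used += size
    return list(kept)


conversation = [
    "My name is Sam and I run a Scrum team.",    # 10 words
    "We ship every two weeks.",                  # 5 words
    "What should our next retrospective cover?", # 6 words
]

# Only the last two messages (11 words) fit; the first one, including the
# user's name, is silently forgotten.
print(build_context(conversation))
# → ['We ship every two weeks.', 'What should our next retrospective cover?']
```

Nothing in this step changes the model itself: the “memory” is just which text gets sent along with the next prompt, which is why the vendor workaround of re-inserting saved details is needed at all.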

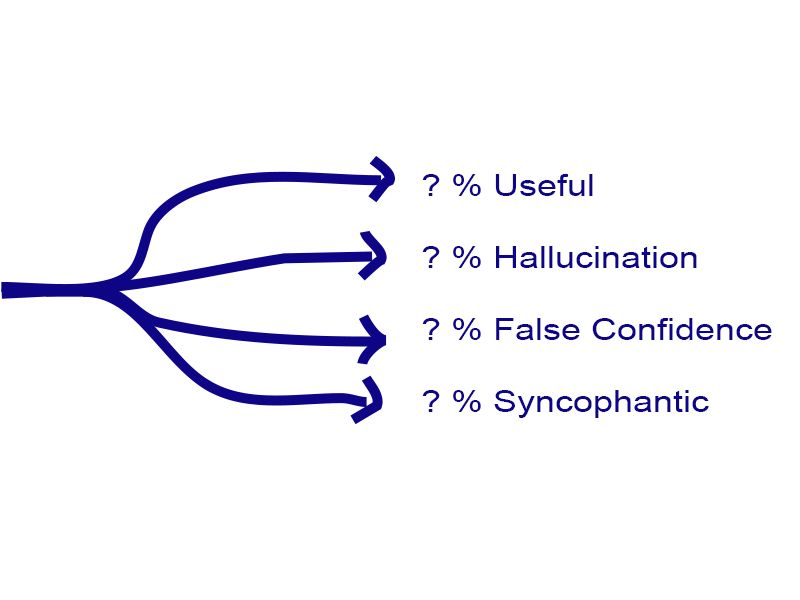

Output Quirks

- Hallucinations and Falsehoods - Because they are just generating plausible text, GenAI models sometimes produce a result that seems correct on the surface but isn’t. Worse, because they have been trained on the entire internet, models will parrot back things they found there, even when those things aren’t true. A model has no sense of truth; it just picks the next most likely word in the sentence.

- Sycophantic - When asked to perform a task, models will often respond with a comment like “That’s a good question”[5]. When I ask a model to critique my writing, I often get a compliment telling me that I’ve made a good point. Responses are what the tool predicts we want to hear. Even when we think we’ve written a neutral, unbiased prompt, it often contains hints that the model picks up on and reflects in its response.[4]

- False Confidence - Since models have been trained on the entire internet, and much of the writing on the internet sounds confident, the models have learned to sound confident too. This is dangerous given the hallucinations and falsehoods mentioned above. Worse, a model has no way of assessing how confident it should be, so it doesn’t adjust its tone.

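- Prompt Sensitivity - Small changes in the prompt can lead to large changes in the response. Rewording a question slightly, or even asking the same question twice, can produce a noticeably different answer.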

- Excessive - A model will often do far more than it was asked to do. Depending on the context, this can be helpful or harmful. If I ask a model to summarize reviews of several different products, doing too much is usually okay, although it literally wastes energy[6], and sometimes the extra depth reveals something unexpected. But doing too much can also be harmful. When I ask a model to write code, it will often produce hundreds of lines that are completely wrong and must be deleted. If I let a model take actions on my computer, it might delete files or do something else irreversible. Even when you ask for brevity, it can still ramble on.

The limitations and quirks above aren’t just idiosyncrasies; they can be quite damaging. Further, these problems are inherent to the current approach to AI development. That’s why caution and responsible use are so important, especially when it comes to sensitive data and privacy.

Privacy

Whenever we share something with a model like OpenAI’s ChatGPT, we need to think about privacy. Much of the focus is on ensuring your prompts aren’t used to train future models. (That’s important, and most vendors have a toggle that lets you opt out.) However, even when your prompts aren’t used for training, vendors keep a log of your interactions, and their staff may have access to that log. This is a double-edged sword: on the one hand, being able to see your chat history can be helpful; on the other hand, we don’t know how secure these logs are. We should also ask whether AI vendors will sell data about our usage to advertisers[7].

There are two approaches I can currently see that offer complete privacy: Apple Intelligence and running an LLM on your own computer. Apple Intelligence relies on models that run either on your device or on Apple’s servers, and Apple says it doesn’t keep any record of those server requests. Apple has even invited security researchers to verify its privacy promise. The downside is that Apple’s models on their own are only okay for most tasks, and if you ask Siri to hand a request to ChatGPT, we’re back to the earlier privacy problem. Recent-generation Macs with enough memory can run local models, some of which are very useful, and well-equipped Windows machines can do this too. Either way, a local model has excellent privacy ;-).

Remember

There is no magic; these models are just prediction machines.

What can we use GenAI for?

These tools aren’t human; anthropomorphizing them makes us think they are. When we anthropomorphize, it is harder to see what they’re good for and what they can’t do.

I limit my use of LLMs to:

- areas I know well enough to check the subtleties myself

- areas where correctness doesn’t matter (e.g., getting a list of ideas or critiquing my writing)

- answers grounded in search results, whose sources I can open and double-check

I use LLMs every day. Where practical, I run them on my own machine so I can keep an eye on energy use and keep my data private. They’re very useful for:

- Brainstorming - good for generating a long list of ideas quickly

- Critiquing writing or presentations

- Transcribing meetings and finding the tasks that were agreed to

- Summarizing longer pieces of text - often helps me see which academic papers are worth reading carefully. (I don’t assume the summary is right; it’s merely a way of finding a needle in a haystack.)

- Summarizing large quantities of customer interview data or support comments - gives me an idea of where to dig further

- Analyzing larger bodies of text to find patterns

- Helping me write code to automate a task

- Helping me write test cases

So with this understanding of the mechanics and limitations of LLMs, next time your manager tells you to use AI, you can ask them how many dice they want you to roll. :-)

One last challenge with any of these tools is that they don’t help us see when we’re asking the wrong question. For example, many teams treat velocity as a meaningful metric. If we ask an LLM how to stabilize or improve our velocity, we will likely get advice that focuses on just that. An experienced human, by contrast, will consider all the factors and, in this example, suggest that we’re putting the focus in the wrong place.

Ignore the promises of Artificial General Intelligence (AGI) and Superintelligence (beyond AGI)[8]; there is no evidence that current approaches will get there. The hype hides the real utility of these tools. LLMs aren’t going to replace human creativity, thinking, and reasoning. They’re simply useful tools for speeding up some particular tasks (like meeting transcriptions and summaries) and for widening the range of ideas we’re considering. Understanding these limitations is essential for any Scrum team deciding how to use AI responsibly, something we explore in our Certified ScrumMaster workshops.

Image attribution: Agile Pain Relief Consulting (August 2025)

Footnotes

[1] Speaking in 1967: “Within a generation … the problem of creating artificial intelligence will substantially be solved.”

[2] Measuring Copyright Risks of Large Language Models: shows that many models risked reproducing parts of J.K. Rowling’s Harry Potter and the Philosopher’s Stone.

[4] Why it’s a mistake to ask chatbots about their mistakes

[7] Perplexity CEO says its browser will track everything users do online to sell ‘hyper personalized’ ads

[8] AI Superintelligence by 2030: Sam Altman’s Bold Timeline & Risks

Mark Levison

Mark Levison has been helping Scrum teams and organizations with Agile, Scrum and Kanban-style approaches since 2001. From Certified ScrumMaster training to custom Agile courses, he has helped well over 8,000 individuals, earning top-rated reviews and respect as one of the pioneers in the industry. He also holds a raft of certifications from the Scrum Alliance. Mark has spoken at Agile conferences for more than 20 years and is a published Scrum author, with eBooks as well as articles on InfoQ.com, ScrumAlliance.org and AgileAlliance.org.